Let’s go back to the start of AI and Regulation. In November 2022 ChatGPT launched, which generated a lot of hype and interest in AI. Let’s step through some of the important UK government reports about AI which could have an impact on social housing providers.

The UK government’s AI Regulation White Paper (the “White Paper”), first published in March 2023 and updated on August 3, 2023, and its written response of February 6, 2024 were perhaps the first position papers. The key thing here? There likely won’t be a central regulator for AI. Instead, the government recommends five principles for all Regulators:

- Principle 1: Regulators should ensure that AI systems function in a robust, secure, and safe way throughout the AI life cycle, and that risks are continually identified, assessed and managed.

- Principle 2: Regulators should ensure that AI systems are appropriately transparent and explainable.

- Principle 3: Regulators should ensure that AI systems are fair (i.e., they do not undermine the legal rights of individuals or organizations, discriminate unfairly against individuals, or create unfair market outcomes).

- Principle 4: Regulators should ensure there are governance measures in place to allow for effective oversight of the supply and use of AI systems, with clear lines of accountability across the AI life cycle.

- Principle 5: Regulators should ensure that users, impacted third parties and actors in the AI life cycle are able to contest an AI decision or outcome that is harmful or creates a material risk of harm, and access suitable redress.

Next we have the AI Opportunities Action Plan (Jan 2025). This sets out the UK government’s approach to AI, and was much promoted in the media. In terms of Housing, there are a couple of key recommendations: that the government “Commit to funding regulators to scale up their AI capabilities, some of which need urgent addressing”, and, potentially the most exciting suggestion for Housing, “Require all regulators to publish annually how they have enabled innovation and growth driven by AI in their sector.” The report also contains an implementation framework, suggesting an approach to AI of Scan > Pilot > Scale. I’d say Housing as a sector is somewhere between Scan and Pilot at present, varying wildly by individual organisation. An interesting part of this will be the Regulator of Social Housing’s future position on AI for the sector, which is not yet published (many Regulators haven’t published one, so this isn’t odd).

Also newsworthy: in Feb 2025 the UK Government declined to sign the Statement on Inclusive and Sustainable Artificial Intelligence at the Paris AI Summit. Signatories to the Statement pledged to make AI “open, inclusive, transparent, ethical, safe, secure, and trustworthy”. I don’t think this directly impacts Social Housing much, but it sets some of the trajectory for wider commercial developments.

Quietly, an Artificial Intelligence Playbook for the UK Government was published in Feb 2025. This report contains decent advice for AI in public services which, in my view, also holds well for Social Housing providers. The paper is short and worth a read. The key principles it outlines:

- You know what AI is and what its limitations are.

- You use AI lawfully, ethically, and responsibly.

- You know how to use AI securely.

- You have meaningful human control at the right stage.

- You understand how to manage the AI life cycle.

- You use the right tool for the job.

- You are open and collaborative.

- You work with commercial colleagues from the start.

- You have the skills and expertise needed to implement and use AI.

- You use these principles alongside your organisation’s policies and have the right assurance in place.

The EU AI Act entered into force in August 2024, and its first obligations, including the prohibitions on unacceptable-risk practices, began to apply in Feb 2025. This is a consideration for Housing, in that this pattern of thinking may be adopted in the UK. The Act is quite large, to the extent that it has its own navigation software for all the chapters, annexes, and recitals. An important (and in my view sensible) feature is that it defines different risk levels, each requiring a different degree of scrutiny.

I’ll just call out one level: “Unacceptable risk, therefore prohibited, examples include the use of real-time remote biometric identification in public spaces or social scoring systems, as well as the use of subliminal influencing techniques which exploit vulnerabilities of specific groups.” Housing providers need to act ethically and responsibly, and their use of data systems, including AI, is no exception.

The Artificial Intelligence (Regulation) Bill (2025), a private member’s bill, passed its first reading in the House of Lords 2 weeks ago. It seems unlikely to become law, but again it may signal the future trajectory for AI regulation. According to Kennedys, the Bill suggests:

- Creation of an AI Authority: the Bill proposes the establishment of a dedicated regulatory body tasked with overseeing AI compliance and coordinating with sector-specific regulators.

- Regulatory Principles: The Bill enshrines the Five AI principles, derived from the UK government’s March 2023 white paper, “A Pro-Innovation Approach to AI Regulation.”

- Public Engagement and AI Ethics: The Bill highlights the need for public consultation regarding AI risks and transparency in third-party data usage, including requirements for obtaining informed consent when using AI training datasets.

Final thoughts: Don’t forget, we still have GDPR. Compliance with GDPR in all data systems remains a requirement. In addition, the risk of GDPR breaches has definitely increased as a result of genAI. Anecdotally, we’ve seen things like customer information being emailed out to personal accounts so that staff can use ChatGPT when it is blocked on the network. Firming up on GDPR and on Cyber Security is always important. Keeping abreast of the latest regulatory and ethical considerations is also a good idea at this time, alongside better understanding and achieving benefits from the tools we now have access to.

Written by a human who is not a lawyer. This is not legal advice.

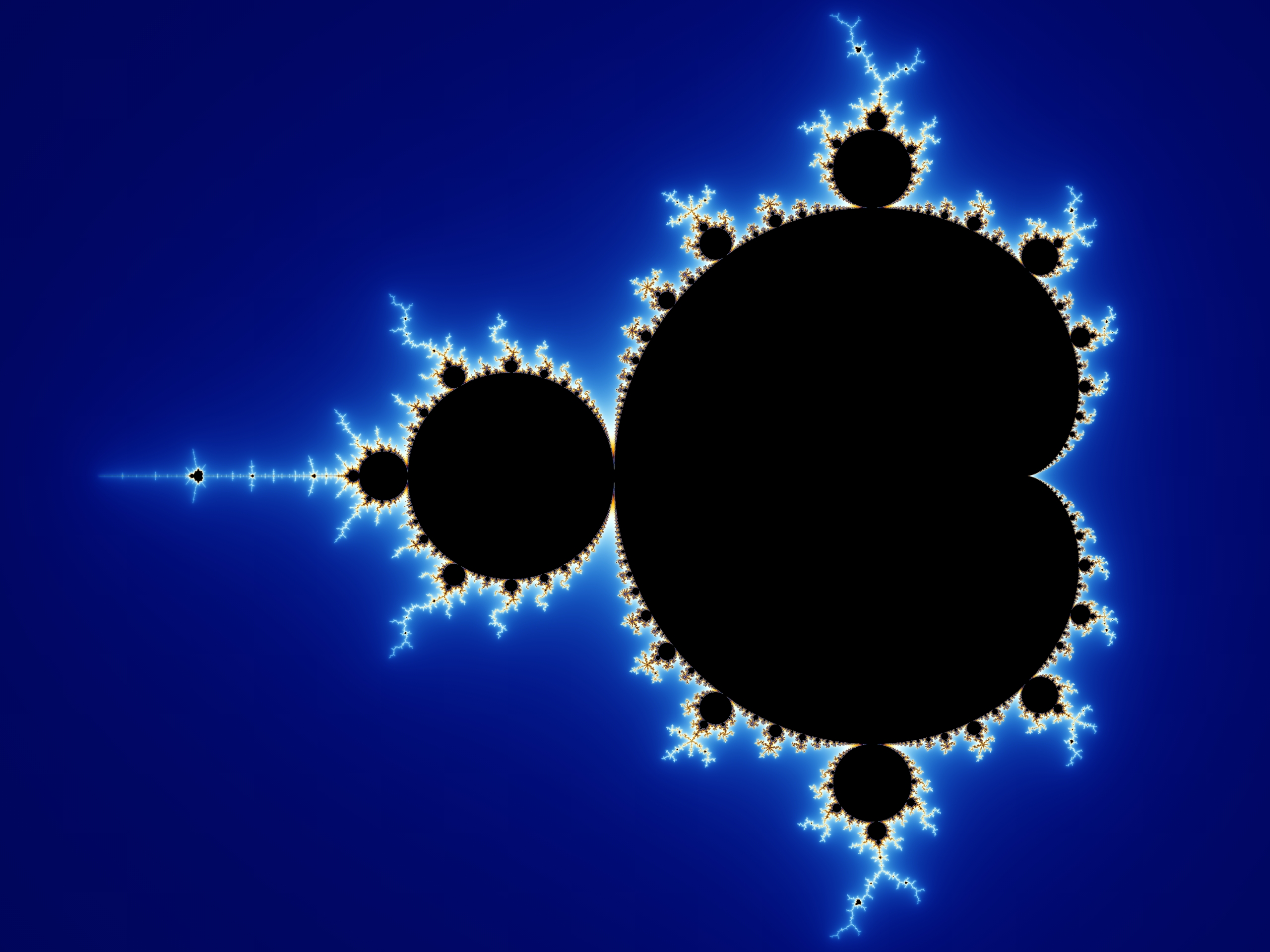

