I’ve been running a “lean” business for 6 months now, and I’ve noticed that Lean Manufacturing principles applied to software development could lead to bad business. Let me explain:

Primarily, my concern centers around the lean manufacturing principle of waste reduction. The constant strive to reduce waste makes sense in an industrial production line, but does it make sense in a startup or exploratory environment?

An example

Let’s say there are 2 possible features you can work on. Feature 1 has a 90% chance of delivering £1 value, and Feature 2 has 10% chance of delivering £100 value.

Let’s say that the features take the same time to develop. From the maths,

Feature 1 “value” = 90% of £1 = £0.90

Feature 2 “value” = 10% of £100 = £10

And yet even with this understanding, because of the implicit risk and waste aversion of lean, we would say “there’s a 90% chance Feature 2 will be wasteful, whereas Feature 1 is sure to not be wasteful, therefore Feature 1 is a better idea”.

Good outcomes, but not as good as they could be

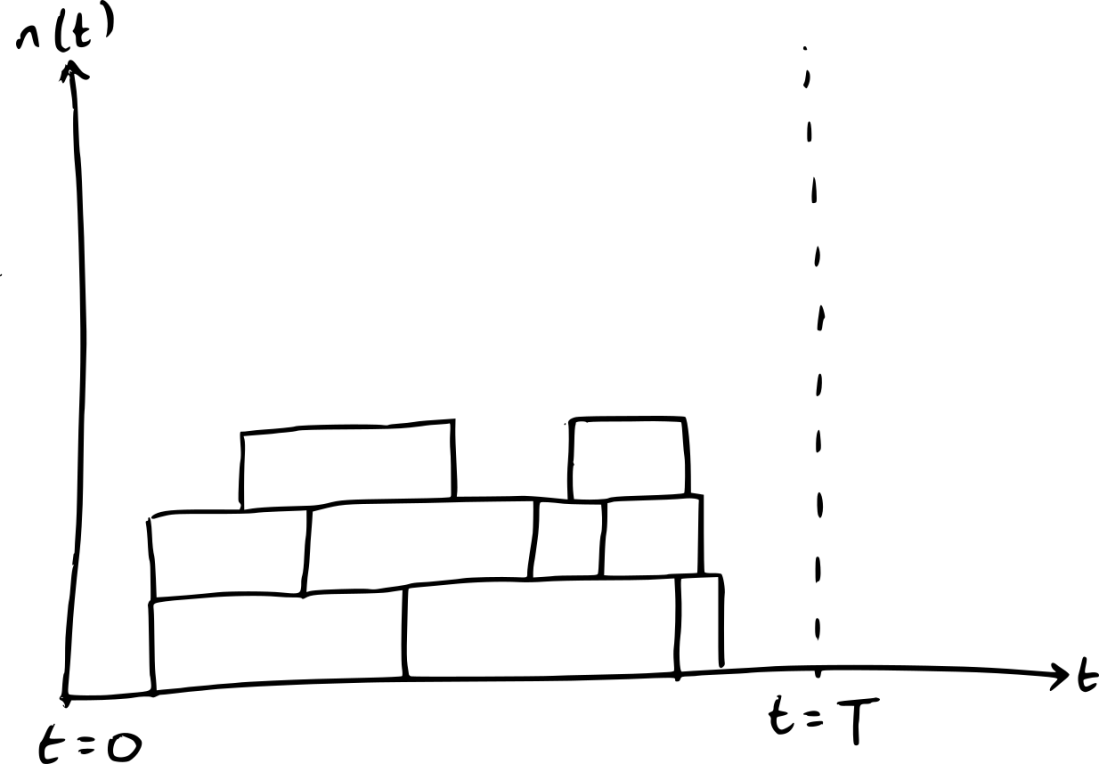

The waste reduction aspect of lean manufacturing gives us a local optimisation, much like gradient descent. Imagine a ball on a hill, which will roll downhill to find the bottom. This is ok, and it will find a bottom (of the valley), but maybe not the bottom (of the world, maybe the Mariana trench). In that sense it is locally good, but not globally optimal.

The way mathematicians sometimes get round this is by repeatedly randomly starting the ball in different places: think a large variety of lat-longs. Then you save those results and take the best one. That way you are more likely to have found a global optimum.

So I’m wondering about whether this kind of random restarting makes sense in a startup world too. I guess we do see it in things like Google’s acquisitions of startups, Project Loon, etc. Perhaps we/I should be doing more off-the-wall things.

Closing commentary

Perhaps it isn’t so odd that Lean Manufacturing has “reduce waste” as a principle… In a production line environment, reduction of waste is the same as increasing value.

Still, if the optimisation problem is “maximise value” this leads to different outcomes than “minimise waste”. I would argue we should, in almost every case, be focusing on maximising value instead.

As we’ve seen with following the rituals rather than the philosophy and mindset of agile, it is beneficial to actually think about what we’re doing rather than applying things without understanding.

Comments below please, I know this may be a bit controversial…

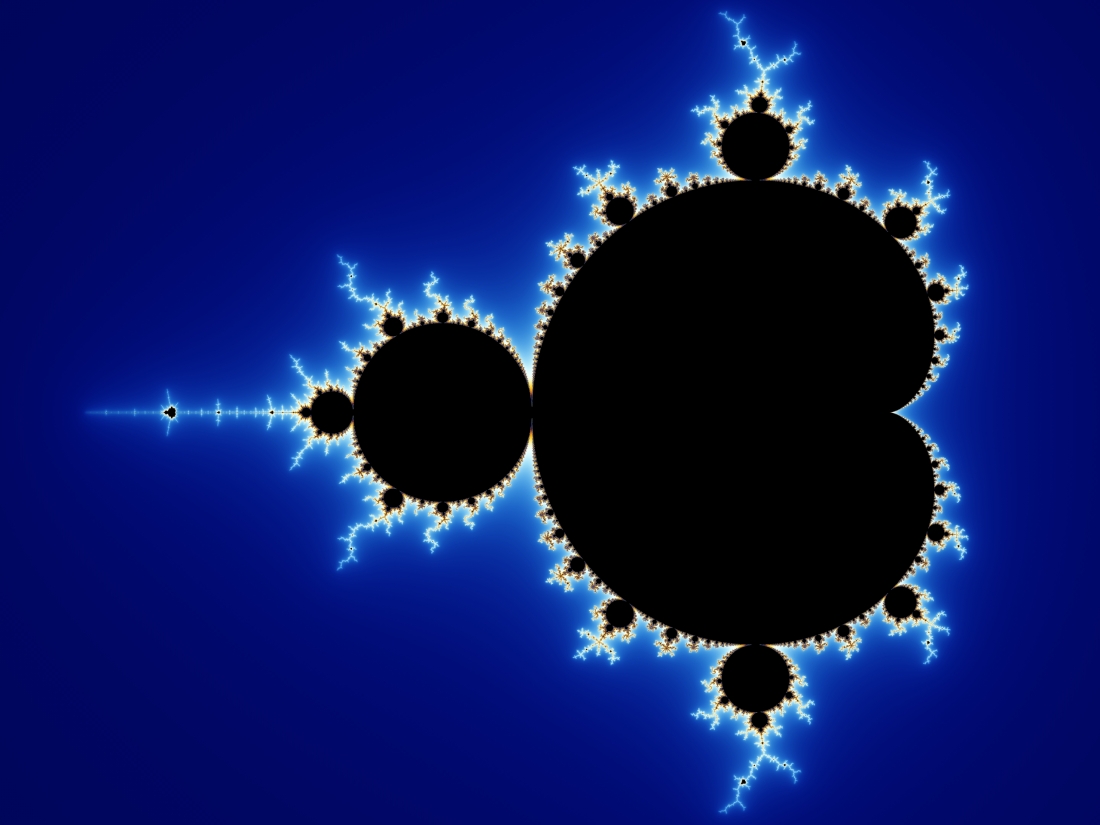

is bounded. These z are complex numbers, which we’ll ignore for now. It is much easier to understand if we look at some examples:

is bounded. These z are complex numbers, which we’ll ignore for now. It is much easier to understand if we look at some examples: